Kick-off meeting

The kick-off meeting for the project AI-SPEAK took place on January 31 2024 in Novi Sad, with online participation of external collaborators. Participating teams and representatives of external collaborators and subcontractors presented their institutions with the focus on their participation on the project. Suitable multimodal corpora were discussed, in terms of the communication context, format, as well as possible scenarios of use. Further steps were agreed upon, related to the acquisition of corpora according to the project plan, as well as directions of future research, particularly with respect to the recent breakthroughs in the area of machine learning.

Activities in the period M1-M3

Our activities in the first three months included studying the state of research in the field as well as preparatory definition of the characteristics of multimodal (audio/video) corpora on which research would be conducted in the upcoming period. Important activities in this period included studying the characteristics of existing multimodal corpora from the perspective of their usability in the development of human-machine multimodal communication systems. In this period we have also procured most of the equipment needed for successful realization of project activities, most notably all equipment needed for recording the AI-SPEAK multimodal speech database in the anechoic chamber of the University of Novi Sad, obtained within the Erasmus+ project SENVIBE (598241-EPP-1-2018-1-RS-EPPKA2-CBHE-JP). We have also published two scientific papers, available in the Publications section.

Activities in the period M4-M6

Our activities in the second trimester of the project included the definition of detailed implementation, dissemination and quality control plans, available in the Publications section. Furthermore, it included preliminary work on the multimodal speech corpus RUSAVIC (Russian Audio-Visual Speech in Cars), obtained in cooperation with the The St. Petersburg Institute for Informatics and Automation of the Russian Academy of Sciences (SPIIRAS), as well as preliminary exploration of several other research paths. Using the recording equipment obtained in the previous trimester we have started the production of the AI-SPEAK speech database in the anechoic chamber of the University of Novi Sad, according to diverse text scripts we have created according to the project proposal and corresponding video-prompts created for this purpose, for each speaker individually. A pipeline was established to paralellize the processes of script production, recording and revision/postprocessing of the obtained material. During this trimester we have started with our involvement in the organizational activities related to this year's edition of the international conference Speech and Computer (SPECOM), which will take place in November 2024 in Belgrade.

Activities in the period M7-M9

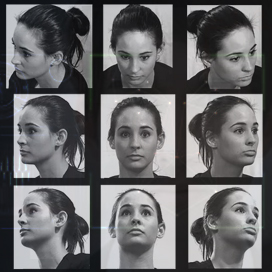

Our activities in the third trimester included the completion of the recording phase of the AI-SPEAK multimodal speech corpus, as well as the collection of the Internet speech corpus, according to the project proposal. Processing and labelling of both speech corpora have started during this trimester, and we also have also extended our research activities mainly in the domain of multimodal speech recognition as well as recognition of speech from video only (also referred to as lip-reading). Procurement of equipment has also been finished during this period, and we also intensified our organisational activities related to this year's edition of the international conference Speech and Computer (SPECOM) in Belgrade.

Activities in the period M10-M12

Most of our activities in the fourth trimester included the organization and participation at the international conference Speech and Computer (SPECOM), which took place from 25th to 28th November 2024 in Belgrade. This event was co-organized with the St. Petersburg Institute for Informatics and Automation of the Russian Academy of Sciences (SPIIRAS). Each year the conference offers a comprehensive technical program presenting all the latest developments in research and technology for speech processing and its applications. The two general chairs of the conference were prof. Vlado Delić, AI-SPEAK team member, and prof. Alexei Karpov, AI-SPEAK external collaborator. Other activities at the project included continued work on the processing of AI-SPEAK and Internet speech corpora for multimodal speech recognition and synthesis.

Activities in the period M13-M15

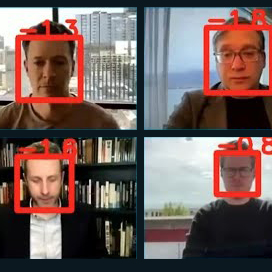

In the fifth quarter, we continued work on speech recognition based on silent video recordings ("lip reading") and expanded activities to include the development of models for audio-visual speech recognition. During this period, we also continued processing the AI-SPEAK speech corpus, after systematically identifying problems and common errors in the recorded material. Additionally, continuous work on collecting the Internet speech corpus progressed, and appropriate software tools were developed for its processing. Namely, recordings obtained from the internet offer a low level of control over their content, and often feature scenes where speakers alternate in the frame, making it necessary to automatically identify the presence of a specific speaker, both spatially and temporally. During this period, some of our previous research was published in a paper in the prestigious scientific journal Mathematics, categorized as M21a.

Activities in the period M16-M18

The sixth quarter of the project was marked by the final phase of preparations for the AI-SPEAK speech corpus and VideoBase (AI-SPEAK Internet Speech Corpus). The AI-SPEAK corpus is publicly available to the research community and contains recordings of 30 speakers, each uttering 80 sentences in Serbian and English (30 sentences shared among all speakers and 50 unique to each speaker). In addition to audio recordings of speech, this corpus also includes video recordings of the lip region of the speakers, captured from multiple angles. The second corpus, VideoBase (AI-SPEAK Internet Speech Corpus), consists of 2,400 unique video recordings of people speaking in various situations (in studios or outside), with a total duration exceeding 1,300 hours. All recordings are in high resolution (1920x1080 pixels) at 25 frames per second.